Astera Labs recently introduced its Aries 6 PCIe Gen6 retimers, which support the CXL 3.x protocol and may become an indispensable component of next-generation servers, whether they are meant for artificial intelligence (AI) training, high-performance computing (HPC), storage, or general-purpose applications. The company said it has shipped samples of the chip to all hyperscale cloud service providers (CSPs) and large server makers. The chip designer expects the product to ramp in AI servers starting in 2025 and to be more widely used from 2026 onwards.

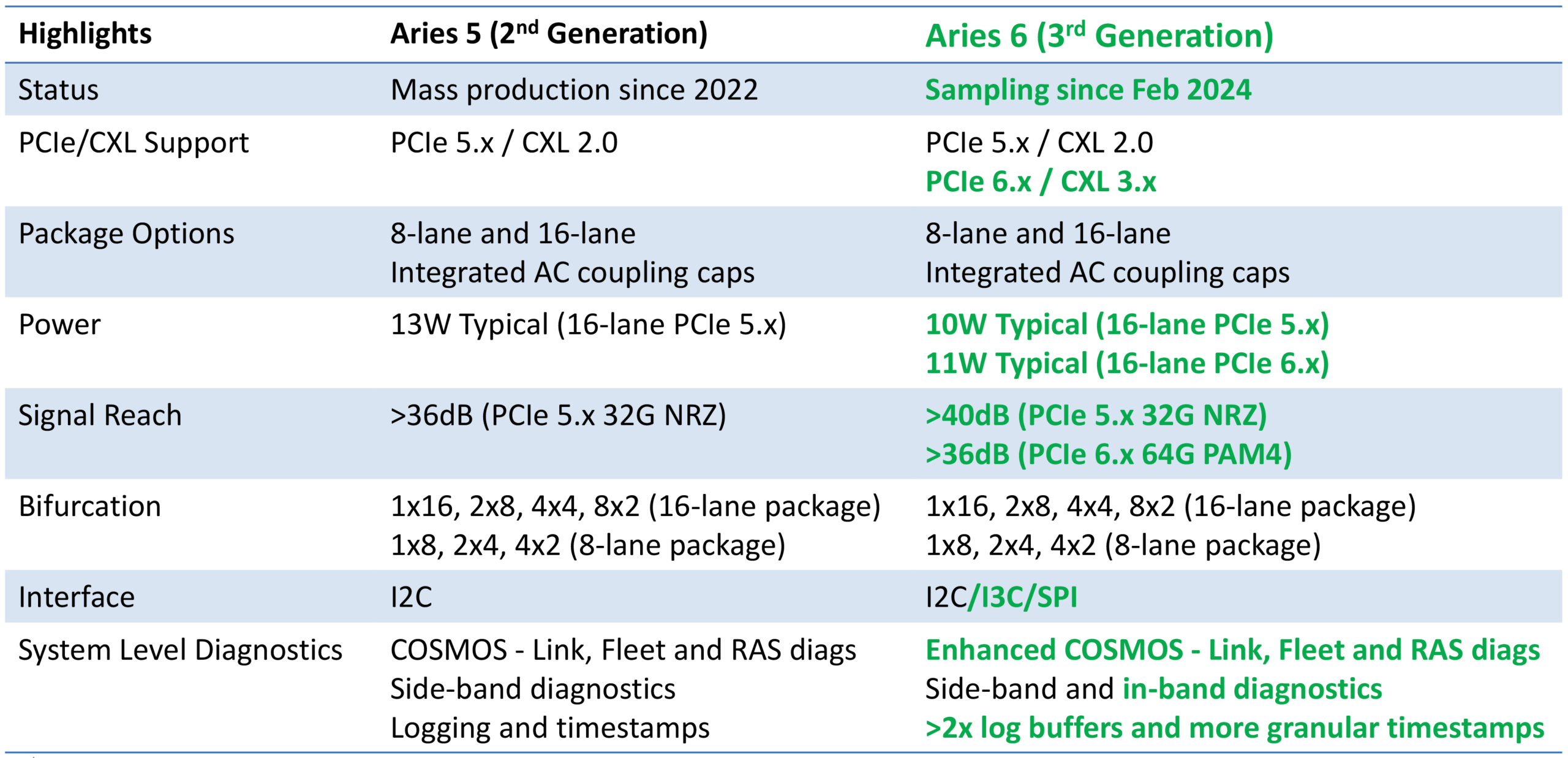

Its new Aries 6 PCIe/CXL smart DSP retimers are multifunctional bidirectional, 8-lane or 16-lane PCIe 6.2 retimers that support bifurcation, recognize the CXL 3.2 protocol, and allow for data-transfer speeds of up to 64 GT/s. The primary function of these retimers is to increase the PCIe trace distance between the root complex and its endpoints by up to three times (or to around 10 inches) while maintaining signal integrity, which is achieved by dynamically compensating for channel losses of up to 40 dB at 64 GT/s (which is better than what the PCIe specification requires, but more on this later).
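To put the 64 GT/s figure in context, the short sketch below converts the per-lane rate into raw per-direction bandwidth for the 8-lane and 16-lane configurations. It assumes one bit per transfer per lane and ignores all protocol overhead (FLIT framing, FEC, CRC), so usable throughput will be lower. The function name is illustrative and does not come from Astera Labs.

```python
# Raw per-direction link bandwidth from the per-lane transfer rate.
# Assumption: each transfer carries one bit per lane; protocol overhead is ignored.
GT_PER_S = 64  # PCIe 6.x per-lane rate quoted in the article


def raw_bandwidth_gbytes(lanes: int, gt_per_s: float = GT_PER_S) -> float:
    """Return raw one-direction bandwidth in GB/s (8 bits per byte)."""
    return lanes * gt_per_s / 8


for lanes in (8, 16):
    print(f"x{lanes}: {raw_bandwidth_gbytes(lanes):.0f} GB/s per direction (raw)")
```

Under these assumptions, an x16 link moves 128 GB/s in each direction before overhead, and an x8 link half of that.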

One of the key selling points of the Aries 6 retimers is their relatively low power consumption of 11W, which is 2W lower compared to their closest rival and which promises to have an impact on power consumption of next-generation datacenters. Reducing power consumption of a PCIe Gen6 retimer to 11W (which is lower than many PCIe Gen5 retimers) is a big deal because PCIe 6.0 uses PAM4 signaling, which is quite expensive in terms of processing and therefore power consumption.

“The GPUs are transitioning to liquid cooled solutions, so what is left for air cooling needs to [be] as low power as possible,” said Ahmad Danesh, an associate VP for product line management at Astera Labs. “Given the sheer number of retimers that are needed in these [AI/HPC] platforms, it is extremely important that each one of them is as low power as possible.”

Unfortunately, Astera Labs does not disclose which process technology it uses to make its Aries 6 retimers, but it certainly is something advanced.

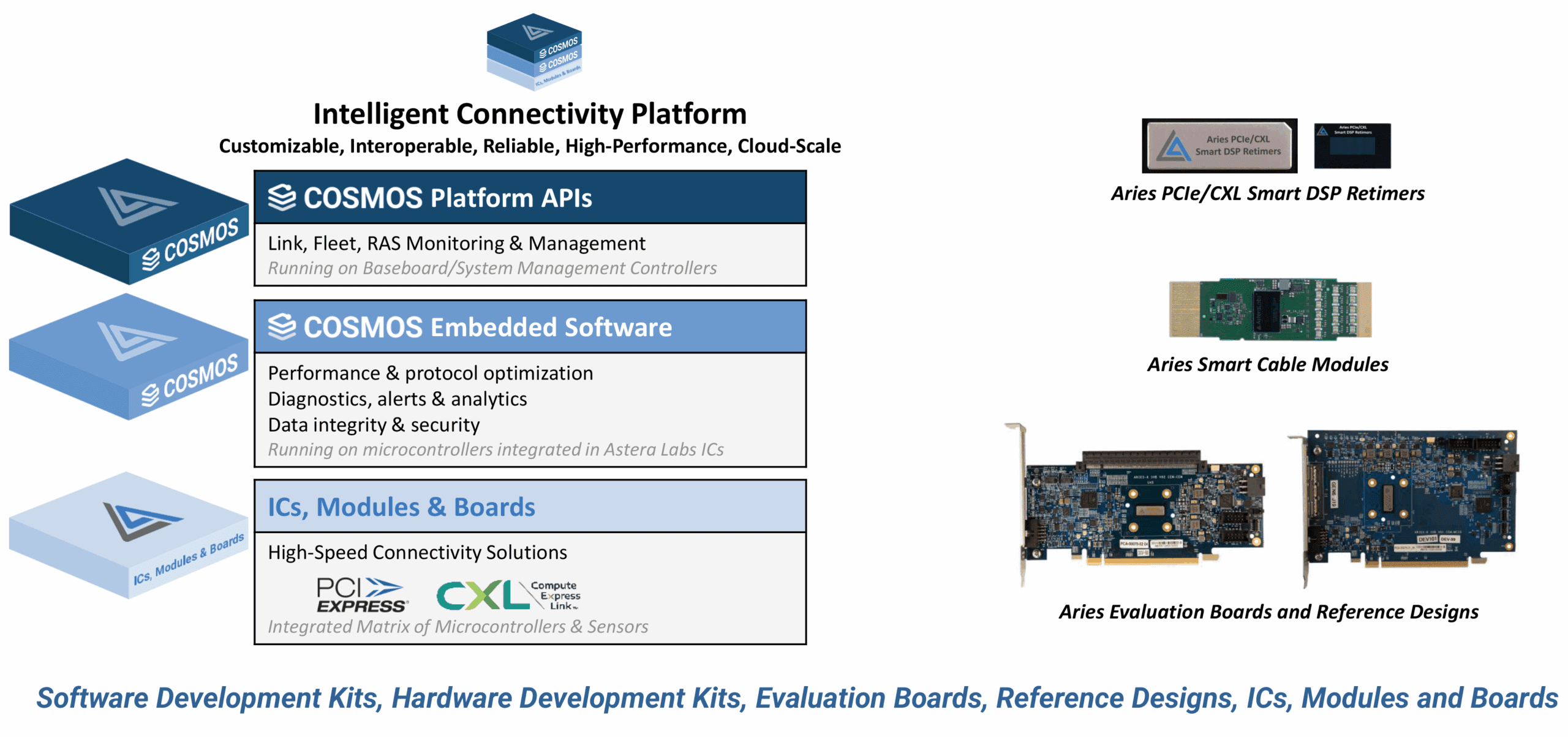

Astera Labs plans to offer its Aries 6 retimers in multiple industry-standard form factors to enable chip-to-chip, box-to-box, and rack-to-rack connectivity. These PCIe Gen6 chips are fully supported by the company’s COSMOS software suite for link, fleet, and RAS management that, among other things, offers real-time protocol issue monitoring, targeted equalization for margin restoration, and integrated sensors for temperature and performance tracking. Considering the number of PCIe interconnections in modern servers, a software suite with link, fleet, and RAS management is a clear benefit. Meanwhile, OEM and ODM partners of Astera Labs tend to integrate COSMOS into their own suites, so while end users may not interact with COSMOS directly, they still use it.

Extending link length

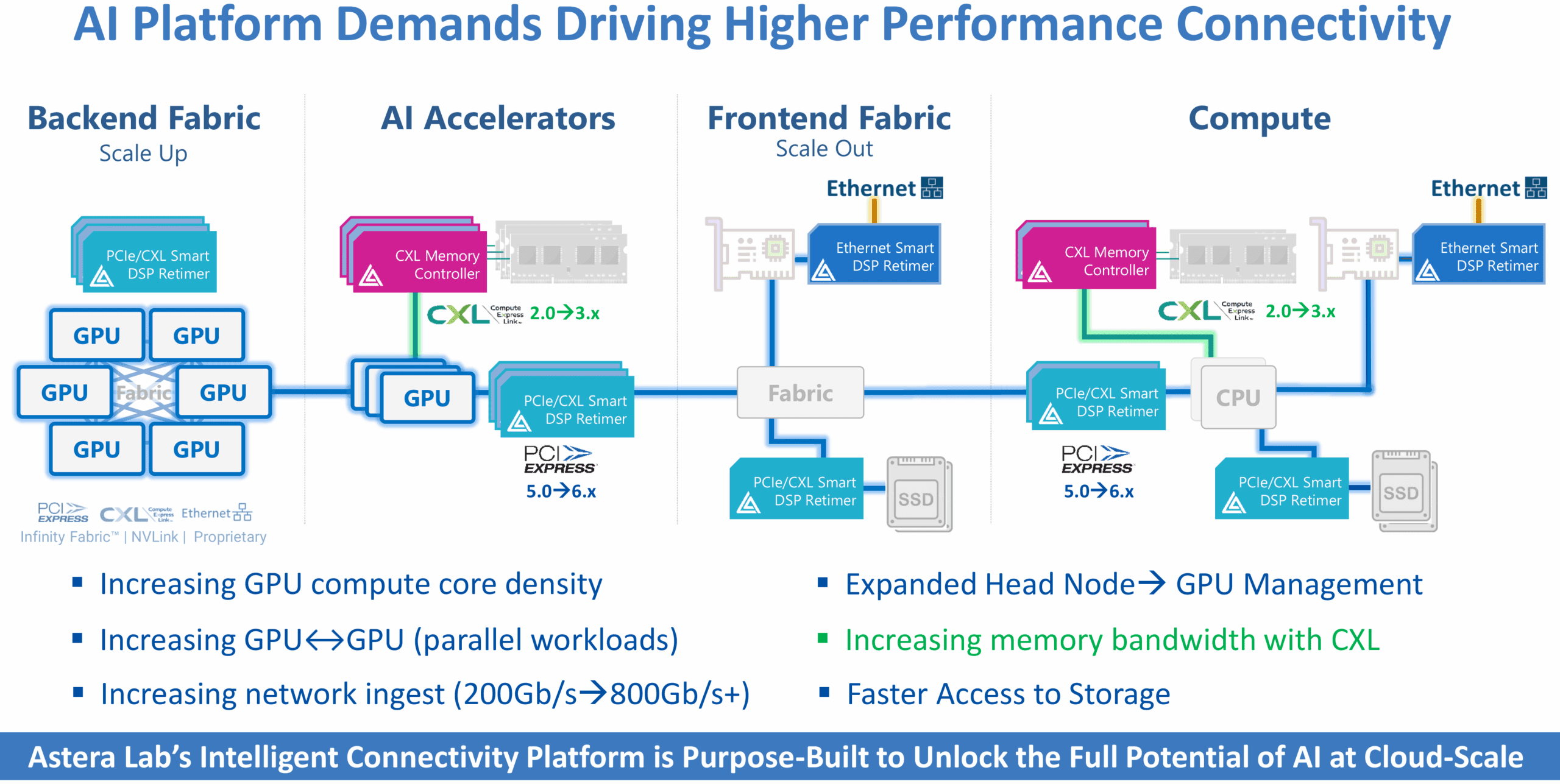

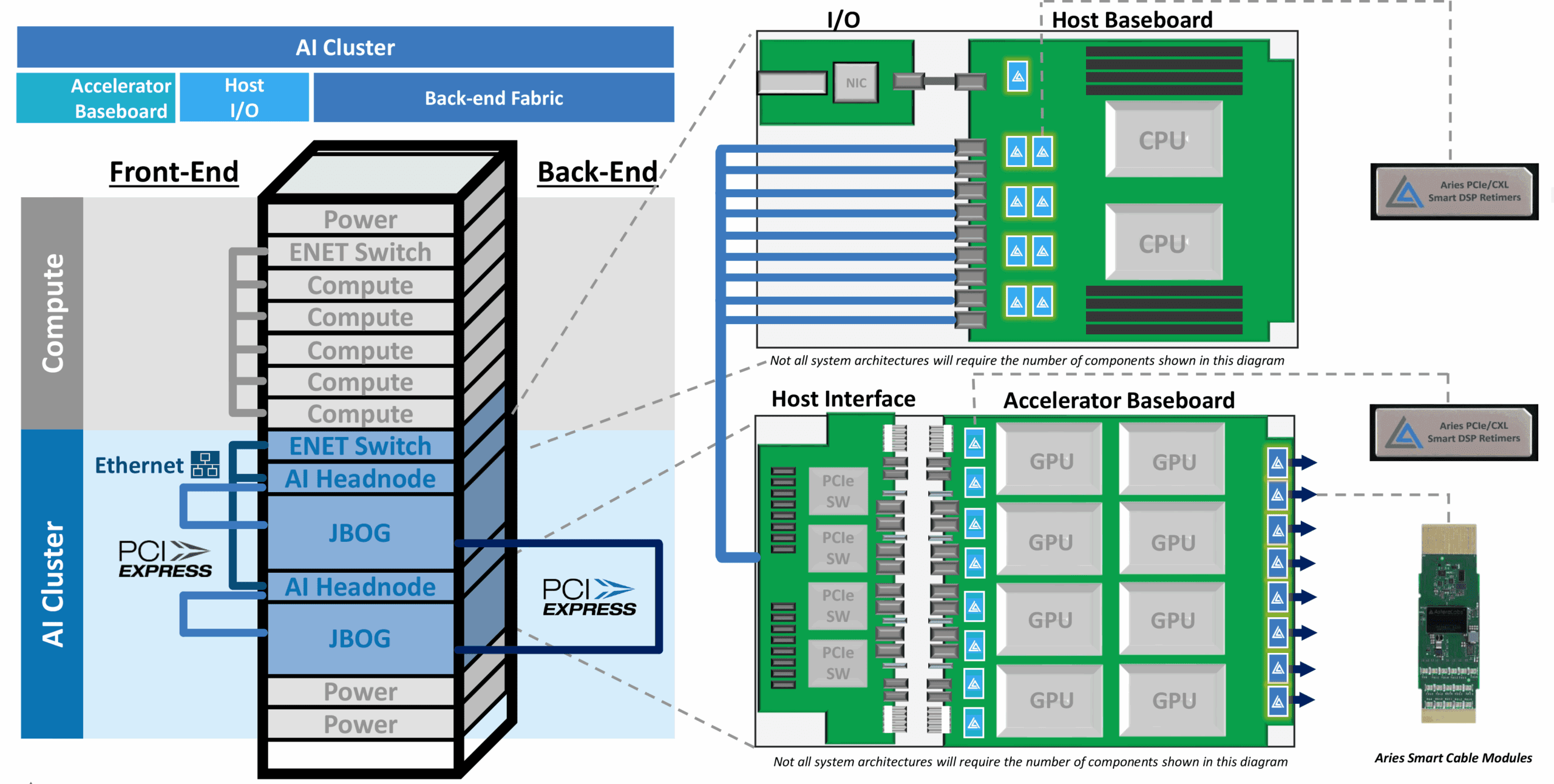

Servers used for artificial intelligence (AI) training and high-performance computing (HPC) applications are the most extreme and sophisticated computers that exist today. Programs running on these machines (or rather clusters of such machines) can consume all the resources they are provided, so AI and HPC servers tend to use the latest and greatest technology. Furthermore, it is important to maximize utilization of these vast compute resources. For AI clusters, connectivity performance is one of the factors that greatly affects GPU utilization, so bandwidth, low latency, and reliability are a must for these machines.

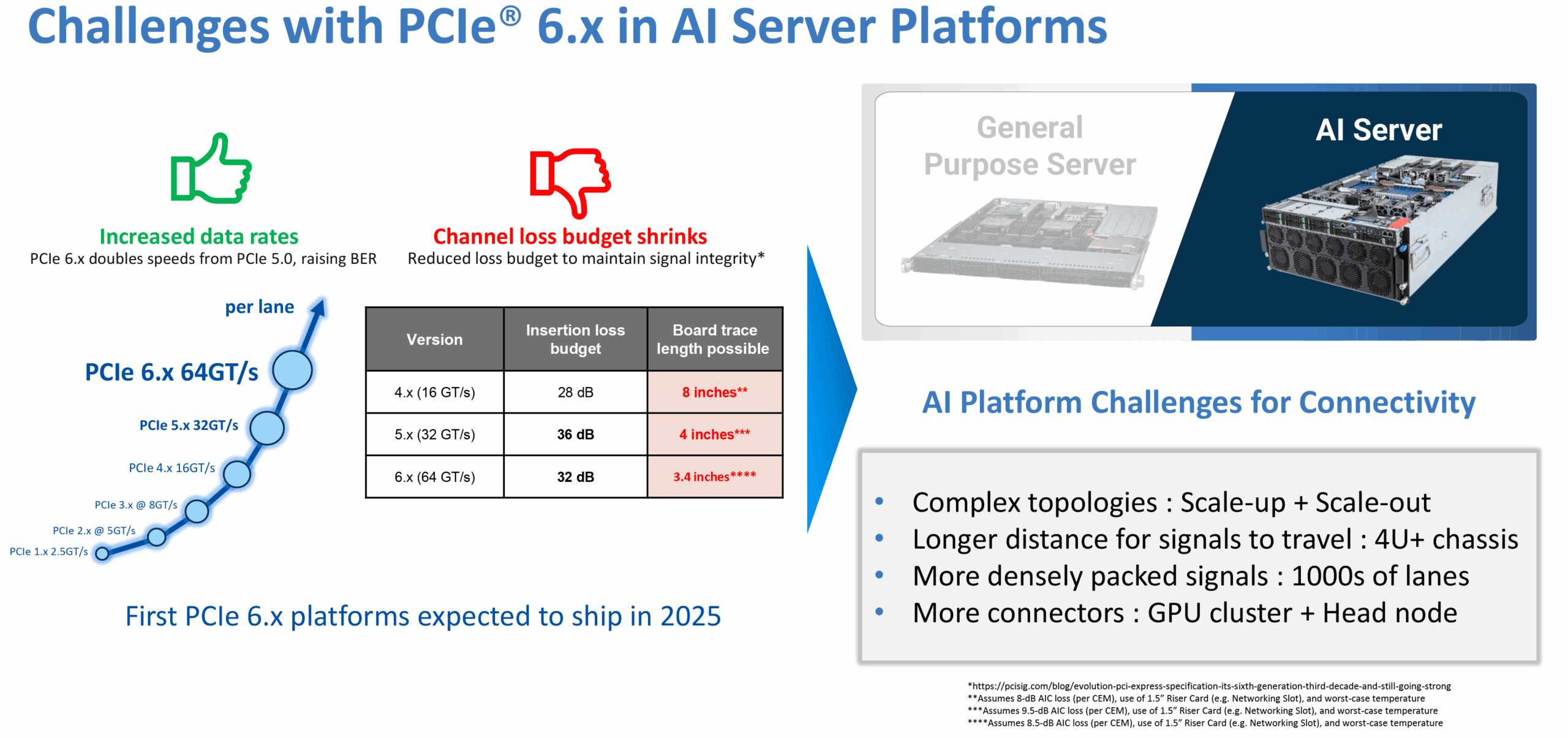

As PCIe data transfer rates increase, the copper trace length between a PCIe root complex and its endpoints decreases significantly due to signal loss, noise, and impedance. For example, a PCIe Gen4 board trace could be up to 11 inches long at 16 GT/s with a channel loss budget of 28 dB, but with PCIe Gen6 at 64 GT/s, this length shrinks to 3.4 inches with a channel loss budget of 32 dB (though this depends on the choice of materials, from low-loss to ultra-low–loss, and on environmental conditions).
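The figures above imply how sharply loss per inch rises between generations. The sketch below simply divides each loss budget by the quoted trace length. This is a crude upper bound, since part of the budget is spent outside the board trace (packages, vias, connectors), and the real number depends on board material. The helper name is illustrative.

```python
# Implied loss per inch = channel loss budget / maximum trace length.
# Assumption: the whole budget is spent on the trace, so this is an upper bound.
def implied_loss_db_per_inch(budget_db: float, trace_in: float) -> float:
    return budget_db / trace_in


gen4 = implied_loss_db_per_inch(28, 11)    # PCIe Gen4 at 16 GT/s
gen6 = implied_loss_db_per_inch(32, 3.4)   # PCIe Gen6 at 64 GT/s

print(f"Gen4: ~{gen4:.1f} dB/in")
print(f"Gen6: ~{gen6:.1f} dB/in")
print(f"Ratio: ~{gen6 / gen4:.1f}x")
```

Even with a slightly larger budget, the quoted Gen6 trace tolerates several times more loss per inch than the Gen4 one, which is why reach collapses without retimers or better materials.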

While 3.4 – 4 inches could be enough to attach an SSD to a CPU in a client PC, this is clearly not enough to attach a GPU on a riser card to a CPU (which is perhaps why consumer-grade GPUs do not support PCIe Gen5). With servers, everything gets more complex, which is why PCIe retimers — compact mixed-signal analog/digital ICs that can receive data transmitted over a PCIe bus, separate the integrated clock, and transmit a refreshed version of the data with a clean and distinct clock signal — are now a critical component of modern servers featuring PCIe 5.x. They are going to get even more important with PCIe 6.x.

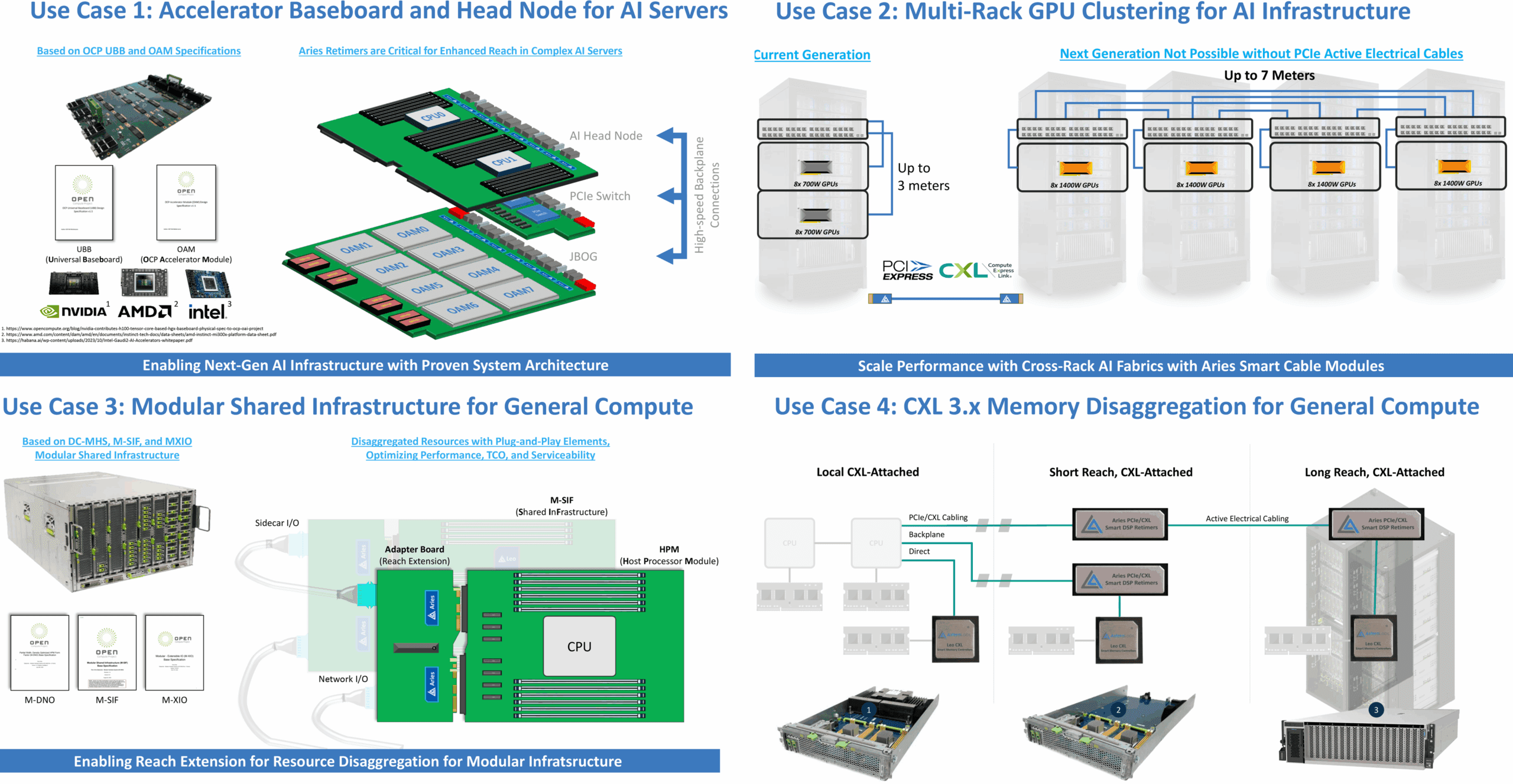

Using two retimers per PCIe Gen6 link can increase board trace length to around 10 inches, according to Astera Labs. Yet retimers cannot extend trace length indefinitely, as the PCIe specification allows only two retimers on a single host-endpoint link. “AI is really going to be driving the demand in terms of the bandwidth,” Danesh said. “Following that, the next wave of deployments we are expecting is the CXL that the general-purpose compute servers are going to transition from PCIe Gen5 to PCIe Gen6 and CXL 3.1, depending on the CPUs, and our retimers can help address that reach and increase the bandwidth for those solutions.”
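The "around 10 inches" figure lines up with simple arithmetic: two retimers split a link into three segments, and three times the 3.4-inch unretimed Gen6 reach is about 10 inches. The sketch below works this out under the simplifying assumption that every segment gets the full unretimed reach. Actual reach depends on board material, connectors, and the retimer's equalization, and the names here are illustrative.

```python
MAX_RETIMERS_PER_LINK = 2   # limit cited in the article from the PCIe specification
UNRETIMED_REACH_IN = 3.4    # Gen6 trace length quoted earlier in the article


def estimated_reach_in(retimers: int) -> float:
    """Rough reach in inches, assuming each segment gets the full unretimed reach."""
    if not 0 <= retimers <= MAX_RETIMERS_PER_LINK:
        raise ValueError("a link may contain 0, 1, or 2 retimers")
    segments = retimers + 1  # N retimers split the link into N + 1 segments
    return segments * UNRETIMED_REACH_IN


for n in range(MAX_RETIMERS_PER_LINK + 1):
    print(f"{n} retimer(s): ~{estimated_reach_in(n):.1f} in")
```

With two retimers, the estimate comes to roughly 10.2 inches, matching the "up to three times" claim.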

Given the limitations imposed by modern PCIe Gen5 and upcoming PCIe Gen6 standards, every compute GPU needs at least one PCIe retimer to connect to the host CPU, according to Astera Labs. The same essentially applies to virtually all PCIe Gen5/Gen6-supporting devices and add-in boards, whether they are CXL-enabled memory extenders, persistent memory modules, network controllers, or SSDs (though the latter are going to lag behind).

“The last wave [to transition to PCIe Gen6] is really going to be storage,” said Danesh. “We are just seeing PCIe Gen5 storage is starting to ramp. So, we will expect Gen6 storage to lag here. But the retimers are important for these applications as well as we see our retimers are used today in a lot of PCIe Gen5 applications for storage already.”

To extend PCIe trace length, server makers can try to use ultra-low–loss exotic PCB materials, but given the sheer volumes of AI servers and limited availability of these materials, this is not really an option for high-volume applications, Astera Labs believes. It makes far more sense to use retimers, especially considering the fact that Aries 6 retimers perform better than the PCIe specification requires them to, according to the company.

“Channel loss budget of the PCIe spec is 36 dB at 32 GT/s and 32 dB at 64 GT/s, so we are doing 40 dB in both cases,” Danesh said. “One solution to [deal with signal loss is to use] more exotic board materials. But given the deployments of AI and how fast everyone is going, they cannot rely on these exotic board materials as they are not quite ready for high volume production in some cases. So, we get more reach so they can stay on the older generations of board materials for as long as possible for them to be able to scale up faster.”
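The margin Danesh describes is a straightforward subtraction. The sketch below tabulates it from the numbers in the quote; the dictionary and function names are illustrative.

```python
# Spec channel loss budgets (dB) by data rate (GT/s), as quoted by Astera Labs.
SPEC_BUDGET_DB = {32: 36, 64: 32}
ARIES6_COMPENSATION_DB = 40  # claimed by Astera Labs at both rates


def margin_over_spec_db(rate_gt_s: int) -> int:
    """Extra loss (dB) the retimer is claimed to tolerate beyond the spec budget."""
    return ARIES6_COMPENSATION_DB - SPEC_BUDGET_DB[rate_gt_s]


for rate in SPEC_BUDGET_DB:
    print(f"{rate} GT/s: +{margin_over_spec_db(rate)} dB over spec")
```

By these figures, the claimed headroom is 4 dB at 32 GT/s and 8 dB at 64 GT/s.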

A modern high-end AI or HPC server (featuring a host baseboard with CPUs and memory and an accelerator baseboard with GPUs) typically contains 17 – 20 retimers, depending on the number of network adapters. Meanwhile, large clusters must connect machines to each other, and doing so with cross-rack AI fabrics and external Aries smart cable modules will further increase the number of PCIe retimers per box to at least 24.
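Combining these retimer counts with the 11W figure quoted earlier gives a rough sense of the per-server power at stake. The sketch below assumes every retimer in the box draws the quoted 11W and that the rival part draws 2W more. Neither assumption is confirmed per configuration by Astera Labs, so treat the output as a back-of-the-envelope estimate only.

```python
ARIES6_W = 11            # per-retimer power quoted in the article
RIVAL_W = ARIES6_W + 2   # article: 2W lower than the closest rival


def retimer_power_w(count: int, per_retimer_w: int = ARIES6_W) -> int:
    """Total retimer power for one server, assuming identical retimers."""
    return count * per_retimer_w


def saving_vs_rival_w(count: int) -> int:
    """Power saved per server versus the rival part, under the same assumption."""
    return count * (RIVAL_W - ARIES6_W)


for count in (17, 20, 24):
    print(f"{count} retimers: {retimer_power_w(count)} W total, "
          f"{saving_vs_rival_w(count)} W saved vs. rival")
```

Under these assumptions, retimers alone account for roughly 190W to 260W per box, and the 2W difference per chip adds up to tens of watts per server.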

“Nvidia GPUs supercharge generative AI and HPC applications, but powerful data connectivity is required to maximize their throughput,” said Brian Kelleher, senior vice president of GPU engineering at Nvidia. “Astera Labs’s new Aries smart DSP retimers with support for PCIe 6.2 will help enable higher bandwidth to optimize utilization of our next-generation computing platforms.”

Meanwhile, Astera Labs envisions that next-generation AI clusters will require more GPUs and more machines (see use case 2 on the picture in the next section), which in turn will require either optical interconnects or PCIe active electrical cables, further increasing usage of its products.

“[When it comes to outside the box connectivity, it depends on] how large is that mesh and how many GPUs [that] large mesh connects,” said Danesh. “Once you have an eight-GPU node talking to another node and to another node, a really large mesh, then the number of connectors [and connections] starts to increase exponentially.”

CXL 3.0 connectivity

Modern server platforms are aimed at a wide variety of applications and use cases, so they tend to support the Compute Express Link (CXL) protocols for efficient CPU-to-device, CPU-to-memory, and device-to-device connections.

The CXL 3.0 protocol is the latest version of the technology that brings substantial improvements over predecessors. Firstly, it is built on top of PCIe 6.0 and therefore doubles the per-lane data transfer rate to 64 GT/s and expands logical capabilities to support complex connection topologies and more flexible memory sharing configurations. Secondly, the specification also enhances cache coherency and memory sharing protocols, allowing for direct peer-to-peer connectivity between devices and true memory sharing between hosts. Finally, CXL 3.0 supports multi-level switching and global fabric attached memory (GFAM), enabling more complex network topologies and efficient multi-node setups (see use case 4).

The Aries 6 PCIe retimers fully support CXL 3.x features, which will enable building pools of devices on CXL/PCIe fabric and CXL 3.x memory disaggregation for accelerated or general compute. Meanwhile, Intel believes that CXL 3.1 support can enable many benefits for AI systems as well.

“PCIe 6.0 interconnects supporting 64 GT/s data speeds will enhance Intel’s latest platforms designed to run next generation AI workloads,” said Zane Ball, corporate VP and general manager of data center and AI product management at Intel. “We applaud Astera for their investment in PCIe 6/CXL 3.1 ecosystem and their contributions toward the development of Intel’s retimer supplemental specification, which will accelerate the rollout of generative AI deployments at scale.”

Sampling since February

Astera Labs began sampling its Aries 6 PCIe retimers in February 2024, and the company confirmed that by now virtually all large hyperscale CSPs and server makers have received samples of this product. Astera Labs says that it has already tested its Aries 6 retimers with 50+ root complexes and endpoints in its cloud-scale interop lab to ensure that they work properly, albeit at PCIe Gen5 speeds, as there is no PCIe Gen6 hardware in volume production today.

“We have shipped samples to hyperscale CSPs, we [are shipping samples] to all the major OEMs,” said Danesh. “Of course, there are ODMs, and the contract manufacturers that I’ve worked with as well. So, we really do span across the datacenter, as well as what feeds into ultimately, the tier two and tier three datacenter, which is a lot of OEMs as well.”

Astera Labs believes that Aries 6 will initially be used for AI servers in 2025, then will be adopted for ultra-high-end general-purpose servers, and then for advanced storage servers sometime in 2026. Over time, usage of PCIe Gen6 retimers is going to increase as more mainstream applications adopt this interconnect, though this is going to happen in the second half of the decade.